INTRODUCTION

One of the inescapable features of the modern technical landscape is the ubiquity with which human-machine interactions occur. In warfighting, these interactions are often characterized by huge benefits and grave consequences, placing strain on the critical relationship between man and machine. This has necessitated a framework shift from machines as tools to machines as peers. Illustrating this point, Lange et al. stated that “the most critical aspect of automation is not the engineering behind the automation itself, but the interaction between any automation and the operator who is expected to work together with it” [1]. Military professionals and academics have identified a lack of trust as a critical challenge to human-machine teaming [2, 3]. A study of team learning in a military context found, “Interpersonal trust is especially important when team tasks require considerable collaboration and team situations entail risk, uncertainty and vulnerability” [4]. Obstacles to trust must be navigated and overcome to achieve even a base level of effectiveness in human-machine teams.

Enhancing interaction and trust with autonomous systems is a core mission of the U.S. Air Force [5]. The U.S. Department of Defense is already applying machines to vastly different teams, from drone swarms for reconnaissance (Figure 1) to explosive ordnance disposal (Figure 2). However, the Air Force has identified key technical challenges that include a “lack of robust and reliable natural language interfaces…fragile cognitive models and architectures for autonomous agents and synthetic teammates…[and an] insufficient degree of trust calibration and transparency of system autonomy” [5]. Ultimately, a requisite level of trust can be severely impaired by all three of these challenges: communication, comprehension, and control. Each of these will be discussed in detail, along with suggested improvements to increase trust.

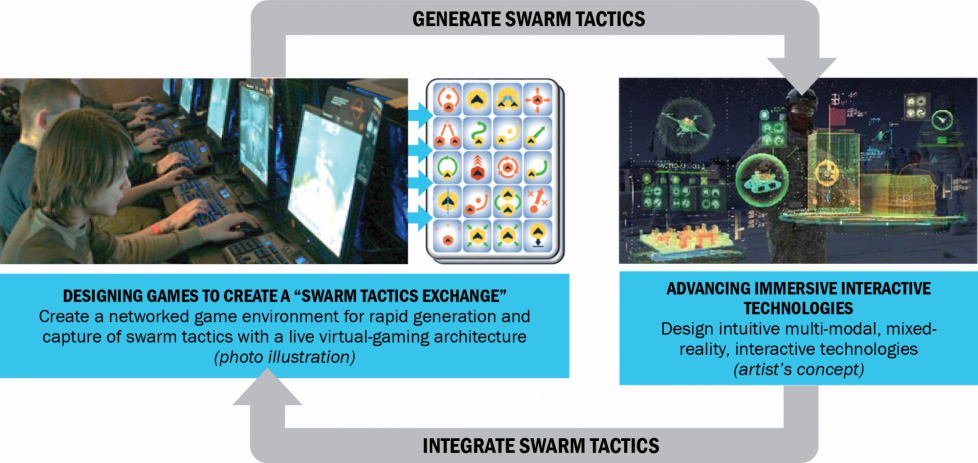

Figure 1: Defense Advanced Research Projects Agency’s (DARPA’s) OFFensive Swarm-Enabled Tactics Program Envisions Future Small-Unit Infantry Forces Using Small Unmanned Systems in Swarms of 250 Robots or More (Source: DARPA).

COORDINATION

At the center of team coordination and success is communication [6]. In covert, high-stakes military combat missions, communication becomes crucial. Unfortunately, communication within human-machine teams is not yet as technically efficient or effective as communication within human-human or machine-machine teams [7]. The U.S. Air Force Research Laboratory’s vision for human-machine teams involves transitioning from a tool-user framework to a peer-peer framework, with a shared common language and understanding [8]. Two-way, peer-to-peer communication is at the center of trusting relationships, including human-machine teams [9]. There are three primary approaches to improving human-machine communication: (1) reducing translation, (2) encouraging useful member feedback, and (3) establishing common ground.

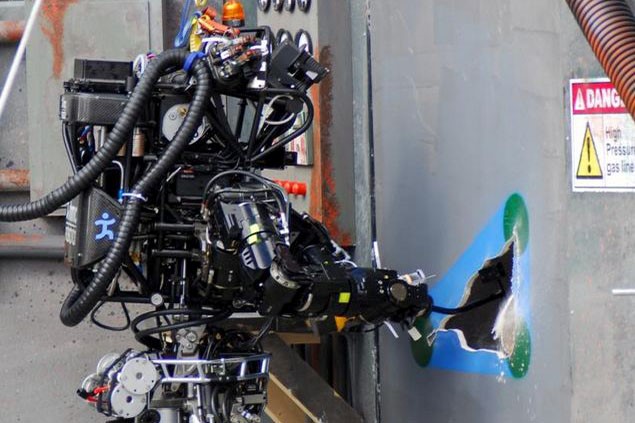

Figure 2: A Navy Explosive Ordnance Disposal Technician Conducts Counter Improvised Explosive Device Training With a Robot During Cobra Gold 2016 in Thailand, 17 February 2016 (Source: U.S. Navy Petty Officer 2nd Class Daniel Rolston).

A tool-user framework generally necessitates translating commands into the native language of the autonomous system through code, joystick commands, or simple phrases. To foster human-machine trust, however, personnel and machines must be peers who communicate in real time through a common language. In a military operation, there is no time for personnel to translate inputs to a machine and interpret complex machine outputs. The mental burden of translating and interpreting orders among various human-machine members is too great a task; the mental load must shift to the machines for the team to be effective. For example, the Atlas robot in Figure 3 must perform routine tasks such as cutting through a wall without direct personnel guidance. Autonomous systems must understand, interpret, and reply to personnel in a way that requires no lengthy translation.

It is also imperative that machines provide feedback to personnel that is easily understood and useful. In efficient teams, members “self-repair.” They identify, clarify, and recover from potential miscommunications. A 2016 study of effective team collaboration found that “teams that used more self-repairs may have been able to minimize collaborative effort by clarifying and adjusting their utterances to their partner’s perspective” [6]. Kozlowski et al. suggest that such feedback mechanisms be considered early in an autonomous system’s design process, allowing engineering design teams to incorporate communication concerns in parallel with other system goals [10]. By including such social constructs in artificial intelligence, human-machine teaming will more closely resemble human-human teams and increase collaboration and trust.

Figure 3: An Atlas Robot Is Hard at Work During the Second Round of DARPA’s Robotics Challenge in 2013 (Source: DARPA).

Gervits et al. found that the most important factor of team collaboration was “the ability to efficiently establish and maintain common ground with one’s teammate through task-oriented dialog” [6]. Effective directors accomplish this by supplying information for team members to confirm. By establishing common ground, all parties ensure they are heard and understood. This requires members be given the ability to frame questions and information through their different perspectives, posing a unique challenge to machines as they do not intrinsically understand differences in human perspective and behavior. Artificial intelligence should include considerations for building rapport and common ground with human team members from a myriad of backgrounds.

Transferring the burden of translation from personnel to machine will greatly improve the efficiency of human-machine communication. For personnel to believe that this improved communication is accurate, however, machines must also provide feedback and establish common ground. This, in turn, will increase trust between team members. Such social developments in artificial intelligence must be considered and incorporated in human-machine teams. Ultimately, “People will trust AI systems when systems know users’ intents and priorities, explain their reasoning, learn from mistakes, and can be independently certified” [11].

COMPREHENSION

Another result of the paradigm shift from tool-user to peer-peer is the need for autonomous systems. Increasing the autonomy and number of machine members creates comprehension challenges for both human and machine members. This asymmetric comprehension adversely impacts trust: how can personnel trust something that they neither understand nor are understood by? According to Lewis, “The asymmetry between what we can command and what we can comprehend has been growing” [12]. Personnel must grasp the way artificial intelligence operates while trusting the machine’s ability to perform assigned tasks. Artificial intelligence must understand complex environments and incorporate collaborative and combative frameworks, allowing teams to consider members’ weaknesses and focus on their strengths.

Combining different perspectives and their effects on outcomes should be evaluated and incorporated into autonomous methodologies [10]. Veestraeten et al. studied team-learning behaviors in a military context and found that a “cognitive awareness of who knows what allows team members to coordinate knowledge, tasks, and responsibilities in an organised and efficient way” [4]. It is important that personnel can conceive with reasonable accuracy what machine team members know and act upon. In special operations missions, specialized and individual roles are extremely well-defined. For example, while clearing a building, the point man must know and trust that the other team members are monitoring their respective zones of awareness, freeing him or her to focus entirely on individual roles in the mission. This is also true in human-machine teams. Personnel must focus on their own tasks and trust that autonomous systems will perform as expected. This can be accomplished by a two-fold approach. First, autonomous systems should incorporate and encourage a reciprocal cognitive awareness among team members without overburdening personnel. They can do this through better communication and artificial intelligence that incorporates the socially complex aspects of the military team. Second, personnel can be trained with machine team members. It is important that personnel understand autonomous decision-making criteria and predict how autonomous systems should behave. By increasing the exposure and experience of personnel with machines, their understanding and cognitive awareness of each other will improve.

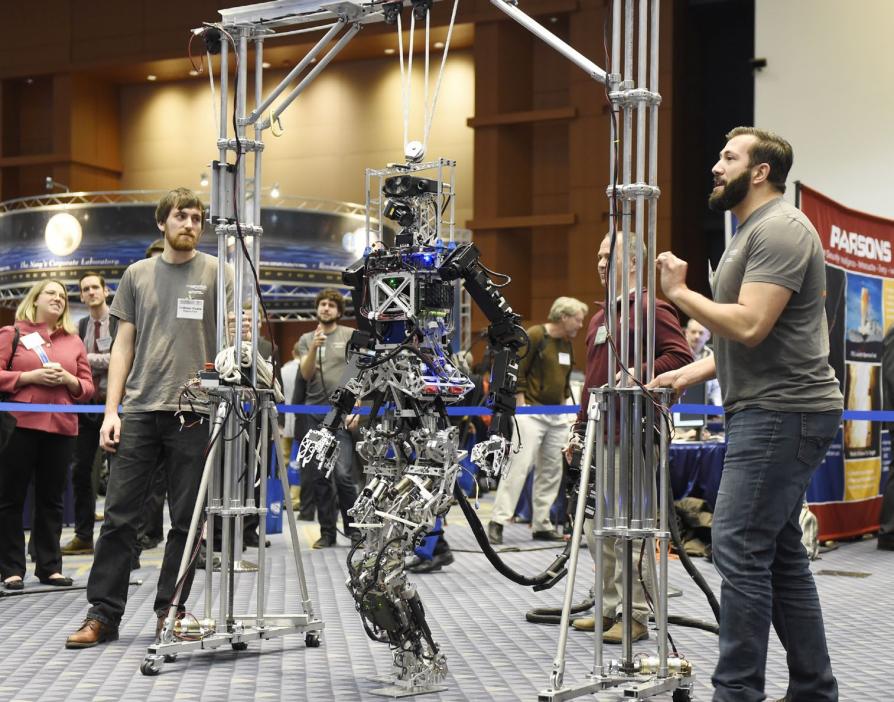

Improving a machine member’s comprehension of movement, environments, and crowd behavior will also increase the efficiency of human-machine teams. Trautman et al. studied the challenge of maneuvering through dense crowds and proposed incorporating crowd cooperation into the AI’s decision algorithms [13]. They found that machines, when presented with a dense crowd of people, either took a highly inefficient, evasive path or froze altogether due to decision paralysis. The revised algorithm incorporated a probabilistic predictive model that assumed other participants would cooperate to avoid collision with the robot. When the model also included multiple goals and stochastic movement duration, it performed similarly to human teleoperators at a crowd density of 0.8 humans per square meter. Conversely, models that did not consider cooperation were three times as likely to exhibit unsafe behavior. When generalizing to combat situations or hazards in unexpected environments, however, much more work in machine comprehension is needed. This is critical for systems such as the Shipboard Autonomous Firefighting Robot (SAFFiR) (Figure 4), which will most likely need to navigate dense crowds fleeing from the scene of the very fires it must extinguish. By including collaborative and combative assumptions, artificial intelligence can better understand and navigate complex environments.

Figure 4: Graduate Students From Virginia Tech Demonstrate the Capabilities of the Office of Naval Research-Sponsored SAFFiR in the Exhibit Hall During the Naval Future Force Science and Technology Expo (Source: U.S. Navy, John F. Williams).

One of the greatest benefits of human-machine teams is integrating a machine’s ability to perform computations far more quickly and accurately than humans. This could be used in a plethora of ways, including the arduous tasks of spatial reasoning and coordinate transformations. The National Aeronautics and Space Administration developed a spatial reasoning agent as part of its early Human-Robot Interaction Operating System [14]. The agent creates a mental simulation of an interaction and assigns it multiple frames of reference. Information is then referenced by frame and location, and the desired perspective is assigned to the world, allowing the spatial reasoning agent to connect local and global coordinates of an interaction. By assigning spatial reasoning tasks to machines, personnel can coordinate among their own reference points and let the machines do the work of translating to all team members. Studies should be conducted to construct teams in a way that highlights each member’s strengths and accounts for their weaknesses.
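The idea of connecting local and global coordinates can be illustrated with a small sketch. This is not the agent described in [14]; it is a minimal two-dimensional example that assumes each team member's frame of reference is given by a position and heading in a shared global frame. The names `Frame2D` and `relay` are hypothetical and introduced only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame2D:
    """Pose of one team member's local frame, expressed in a shared global frame.

    Hypothetical illustration: x, y are the frame origin in meters, and
    heading is the rotation of the local +x axis (the member's "ahead"),
    in radians counterclockwise from the global +x axis.
    """
    x: float
    y: float
    heading: float

    def to_global(self, px: float, py: float) -> tuple[float, float]:
        # Rotate the local point by the heading, then translate by the origin.
        c, s = math.cos(self.heading), math.sin(self.heading)
        return (self.x + c * px - s * py, self.y + s * px + c * py)

    def from_global(self, gx: float, gy: float) -> tuple[float, float]:
        # Inverse transform: remove the translation, then rotate by -heading.
        dx, dy = gx - self.x, gy - self.y
        c, s = math.cos(self.heading), math.sin(self.heading)
        return (c * dx + s * dy, -s * dx + c * dy)


def relay(point: tuple[float, float], source: Frame2D, target: Frame2D) -> tuple[float, float]:
    """Re-express a point reported in the source frame in the target frame."""
    gx, gy = source.to_global(*point)
    return target.from_global(gx, gy)


if __name__ == "__main__":
    # A soldier at (10, 0) facing global +y reports an object 5 m straight ahead.
    soldier = Frame2D(x=10.0, y=0.0, heading=math.pi / 2)
    # A robot sits at the global origin, aligned with the global axes.
    robot = Frame2D(x=0.0, y=0.0, heading=0.0)
    print(relay((5.0, 0.0), soldier, robot))  # roughly (10, 5) in the robot's frame
```

In this sketch the soldier only ever speaks in terms of "ahead" and "to my left"; the machine carries the bookkeeping needed to re-express that report for every other team member.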

Government and industry are working on explaining the varying levels of comprehension and capabilities. In 2016, DARPA created the Agile Teams program, which focuses on developing mathematical models that enable the optimization of human-machine teams. The model will be generalized, allowing the input of team specifics that would result in applicable abstractions, algorithms, and architectures. The goal is to create a new teaming methodology that will “dynamically mitigate gaps in ability, improve team decision making, and accelerate realization of collective goals” [15]. This will improve the control of human-machine teams by ensuring the team structure is most efficient and realistic.

Human-machine trust and efficiency will continue to improve as comprehension and experience increase. Division of roles and transparency in decision making are critical for personnel to understand autonomous team members. Personnel must understand artificial intelligence and assigned roles. As artificial intelligence improves and accounts for complex environments, trust will also improve. As IBM summarized, “To reap the societal benefits of AI, we will first need to trust AI. That trust will be earned through experience, of course, in the same way we learn to trust that an ATM will register a deposit, or that an automobile will stop when the brake is applied. Put simply, we trust things that behave as we expect them to” [11]. After this trust is established, teams can be constructed to capitalize on the benefits provided by machine members.

CONTROL

Human-machine teams require that machine members operate with a high level of autonomy. However, personnel must retain a certain level of control for ethical considerations and team effectiveness. A study conducted by the Florida Institute for Human and Machine Cognition found that “increases in autonomy may eventually lead to degradations in performance when the conditions that enable effective management of interdependence among the team members are neglected” [16]. The study emphasized that while autonomy is required, machines must also remain somewhat dependent, collaborating and participating in interdependent joint activity. Thus, there is a fine balance to achieve: too much control reduces team efficiency, while too little control increases the potential for error. There are three ways that control can be addressed: (1) new command techniques, (2) error-checking artificial intelligence, and (3) continued oversight.

Figure 5: OFFSET Approach to Command Interfaces and Training (Source: DARPA).

To maintain efficient teams while increasing the autonomy of machine members, better control interfaces must be established. Lange et al., with the Space and Naval Warfare Systems Center, stated that while autonomy is needed for very large teams, “autonomic strategies must also be made more adaptable and in doing so also maintain the property of being recognizable by a commander” [1]. DARPA’s OFFensive Swarm-Enabled Tactics (OFFSET) program attempts to address this challenge by combining new communication techniques and virtual reality training (Figure 5). Because personnel will need to monitor and direct a large swarm of unmanned systems, the program is developing “rapidly emerging immersive and intuitive interactive technologies (e.g., augmented and virtual reality, voice-, gesture-, and touch-based) to create a novel command interface with immersive situational awareness and decision presentation capabilities” [17]. The Office of Naval Research’s Battlespace Exploitation of Mixed Reality Laboratory is already seeking to apply commercial mixed reality, virtual reality, and augmented reality technologies (Figure 6); the scope could also be widened to include autonomous systems. By focusing on ease of communication and virtual reality immersion, controlling a swarm might be much more intuitive, reducing personnel’s mental workload.

Figure 6: Navy Lt. Jeff Kee Explores the Office of Naval Research-Sponsored Battlespace Exploitation of Mixed Reality Laboratory at the Space and Naval Warfare Systems Center Pacific, San Diego, CA, 14 September 2015 (Source: U.S. Navy, John F. Williams).

Humans excel at making decisions on limited data and at sensing when data have been compromised. Artificial intelligence must mimic this decision-making and real-time data analysis with extremely large data sets. As IBM reported, “Training and test data can be biased, incomplete, or maliciously compromised. Significant effort should be devoted to techniques for measuring entropy of datasets, validating the quality and integrity of data, and for making AI systems more objective, resilient, and accurate” [11]. This will allow machine team members to remain autonomous when gathering data and performing calculations. By passing off data responsibilities and error checking to machine members, personnel can focus their control on those truly critical elements.
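The IBM response does not specify how dataset entropy should be measured. As one possible reading, the sketch below computes the Shannon entropy of a dataset's class labels, which gives a rough indication of how balanced the training data are. The function names and the example labels are hypothetical, and a real integrity check would need far more than this single statistic.

```python
import math
from collections import Counter
from typing import Hashable, Iterable


def shannon_entropy(labels: Iterable[Hashable]) -> float:
    """Shannon entropy, in bits, of the empirical label distribution."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("cannot compute entropy of an empty dataset")
    # H = sum over classes of p * log2(1 / p), with p the class frequency.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


def normalized_entropy(labels: Iterable[Hashable]) -> float:
    """Entropy scaled to [0, 1]: 1.0 means perfectly balanced classes.

    Assumption for this sketch: a single-class dataset is reported as 0.0.
    """
    items = list(labels)
    num_classes = len(set(items))
    if num_classes <= 1:
        return 0.0
    return shannon_entropy(items) / math.log2(num_classes)


if __name__ == "__main__":
    # Hypothetical label sets for a two-class detection task.
    balanced = ["threat", "clear"] * 50
    skewed = ["threat"] * 2 + ["clear"] * 98
    print(normalized_entropy(balanced))
    print(normalized_entropy(skewed))
```

A value near zero on the skewed set would flag that one class dominates the data, which is the kind of signal a machine team member could surface to personnel before the data are used for decisions.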

As there is a significant gap between human inherent understanding of the environment, test data, and human behavior, there must still be an element of personnel oversight and control. The U.S. Army Research Laboratory found that too much trust could pose a danger. According to Schaefer et al., “An over-trusting operator is more likely to become complacent and follow the suggestions of the automation without cross-checking the validity against other available and accessible information” [18]. More efficient human-machine teams require increased levels of trust. However, personnel must still actively manage machine team members when necessary. Hancock et al. characterized a complete lack of control as neglect and found that “neglect tolerance should be appropriate to the capabilities of the robot and the level of human-robot trust” [19]. It is important that personnel be reminded of the capabilities and shortcomings of machine members. Machines must remain dependent on personnel, and personnel must retain a certain level of distrust. This continued oversight and interdependence will strengthen the bonds between man and machine and prevent dangerous complacency.

This tension between autonomy and control must exist to have highly effective human-machine teams where personnel still retain ultimate control. Virtual reality and improved interfaces make controlling autonomous systems and human-machine teams easier. As artificial intelligence improves, machines will use compromised and limited data to make decisions comparable to their human peers. For practical and ethical reasons, the ultimate responsibility for actions taken by the team must still rest with a human leader. Relinquishing too much control results in a team that may be ineffective and possibly dangerous. Effective human-machine teams reside in this balance between autonomy and interdependence.

CONCLUSION

Significant advances are being made in artificial intelligence, autonomy, and human-machine teaming. At the heart of effective teams, however, are interpersonal relationships built on understanding and trust. For human-machine teams to overcome the barriers of communication, comprehension, and control, more work is needed. Through improving communication, feedback, and understanding common ground, coordination among team members will improve. Asymmetrical comprehension inherent in autonomy necessitates a deeper understanding of decision making, roles, and human-machine team frameworks. Finally, a balance must be found when controlling autonomous team members. Control interfaces must be more efficient and natural to allow machines to control certain areas. However, maintaining control and challenging complacency are required to avoid mistakes when autonomous systems operate outside their design. By continuously tweaking these areas for efficiency, human-machine teams will not only exist but thrive.

References:

1. Reeder. “Command and Control of Teams of Autonomous Units.” Presented at the 17th International Command and Control Research and Technology Symposium, Space and Naval Warfare Systems Center, San Diego, CA, June 2012.

2. DeLaughter, J. “Building Better Trust Between Humans and Machines.” MIT News, 21 June 2016.

3. Gillis, J. “Warfighter Trust in Autonomy.” DSIAC Journal, vol. 4, no. 4, 2017.

4. Veestraeten, M., E. Kyndt, and F. Dochy. “Investigating Team Learning in a Military Context.” Vocations and Learning, vol. 7, issue 1, pp. 75–100, April 2014.

5. Tangney, J., P. Mason, L. Allender, K. Geiss, M. Sams, and D. Tamilio. “Human Systems Roadmap Review.” Technical Report DCN# 43-1322-16, Office of Naval Research, Arlington, VA, February 2016.

6. Gervits, F., K. Eberhard, and M. Scheutz. “Team Communication as a Collaborative Process.” Frontiers in Robotics and AI, vol. 3, article 62, October 2016.

7. MacDonald, M. “Human-Machine Teams Need a Little Trust to Work.” AIAA Communications, 11 January 2018.

8. Overholt, J., and K. Kearns. “Air Force Research Laboratory Autonomy Science & Technology Strategy.” 88ABW-2014-1504, U.S. Air Force Research Laboratory, 711 HPW/XPO, April 2014.

9. Lockheed Martin. “Machines Evolve to Help, But Not Replace, Humans.” Washington Post WP Brand Studio, October 2015.

10. Kozlowski, S. W. J., J. A. Grand, S. K. Baard, and M. Pearce. “Teams, Teamwork, and Team Effectiveness: Implications for Human Systems Integration.” In D. Boehm-Davis, F. Durso, and J. Lee (Eds.), The Handbook of Human Systems Integration, pp. 555–571, Washington, D.C.: American Psychological Association.

11. IBM. “Preparing for the Future of Artificial Intelligence.” IBM Response to the White House Office of Science and Technology Policy’s Request for Information, 2016.

12. Lewis, M. “Human Interaction with Multiple Remote Robots.” Reviews of Human Factors and Ergonomics, vol. 9, ch. 4, pp. 131–174, 2013.

13. Trautman, P., J. Ma, R. M. Murray, and A. Krause. “Robot Navigation in Dense Human Crowds: Statistical Models and Experimental Studies of Human-Robot Cooperation.” The International Journal of Robotics Research, vol. 34, no. 3, pp. 335–354, 2015.

14. Fong, T., L. M. Hiatt, C. Kunz, and M. Bugajska. “The Human-Robot Interaction Operating System.” In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, pp. 41–48, Salt Lake City, UT: Association for Computing Machinery, 2006.

15. Defense Advanced Research Projects Agency. “Designing Agile Human-Machine Teams.” Program Information, 28 November 2016, https://www.darpa.mil/program/2016-11-28, accessed 10 April 2018.

16. Allen, J. “Process Integrated Mechanism for Human-Computer Collaboration and Coordination.” AFRL-OSR-VA-TR-2012-1071, The Florida Institute for Human and Machine Cognition, Pensacola, FL, December 2012.

17. Chung, T. “OFFensive Swarm-Enabled Tactics (OFFSET).” Defense Advanced Research Projects Agency, Program Information, https://www.darpa.mil/program/2016-11-28, accessed 17 April 2018.

18. Schaefer, K., D. Billings, J. Szalma, J. Adams, T. Sanders, J. Chen, and P. Hancock. “A Meta-Analysis of Factors Influencing the Development of Trust in Automation: Implications for Human-Robot Interaction.” ARL-TR-6984, U.S. Army Research Laboratory, Aberdeen Proving Ground, MD, July 2014.

19. Hancock, P., D. Billings, K. Oleson, J. Chen, E. De Visser, and R. Parasuraman. “A Meta-Analysis of Factors Influencing the Development of Human-Robot Trust.” ARL-TR-5857, U.S. Army Research Laboratory, Aberdeen Proving Ground, MD, December 2011.